
Web scraping is the process of pulling data from specific websites, and because it can be automated, it can run regularly with little manual effort.

In the digital age, data plays a critical role in the business world, and web scraping is an effective way to collect this data.

This technique makes it possible to obtain structured or unstructured data using programming languages and various tools.

Basics of Web Scraping

Web scraping is the general term for extracting data from web pages on the internet. It works on documents written in markup languages such as HTML or XML, and it is especially useful for collecting large data sets efficiently.

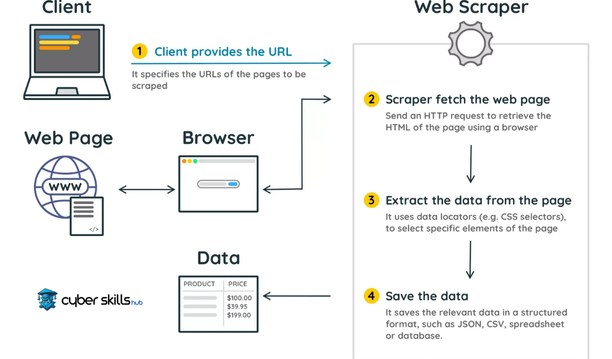

A web scraping process starts by analyzing the source code of the target web page. Next, an algorithm is developed to extract the requested data using specific tags, classes, or IDs. This process is usually automated by bots written in programming languages, which reduces the need for manual labor.
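As a minimal sketch of selecting data by tag, class, or ID, the snippet below uses only Python's standard-library html.parser module so it runs without extra packages; in real projects a library such as Beautiful Soup offers much richer selection. The SelectorExtractor class and the sample markup are hypothetical, and the class handles just one tag/class/ID rule at a time.

```python
from html.parser import HTMLParser


class SelectorExtractor(HTMLParser):
    """Collect the text of elements matching a tag name and an optional class or id.

    Hypothetical helper for illustration, not a full selector engine.
    """

    def __init__(self, tag, css_class=None, element_id=None):
        super().__init__()
        self.tag = tag
        self.css_class = css_class
        self.element_id = element_id
        self.results = []
        self._depth = 0      # > 0 while we are inside a matching element
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if self._depth:
            # Nested tag with the same name: count it so we stop at the right end tag.
            if tag == self.tag:
                self._depth += 1
            return
        if tag != self.tag:
            return
        attributes = dict(attrs)
        classes = (attributes.get("class") or "").split()
        if self.css_class is not None and self.css_class not in classes:
            return
        if self.element_id is not None and attributes.get("id") != self.element_id:
            return
        self._depth = 1
        self._buffer = []

    def handle_endtag(self, tag):
        if self._depth and tag == self.tag:
            self._depth -= 1
            if self._depth == 0:
                self.results.append("".join(self._buffer).strip())

    def handle_data(self, data):
        if self._depth:
            self._buffer.append(data)


page = """
<ul>
  <li class="product"><span class="name">Desk lamp</span></li>
  <li class="product"><span class="name">Office chair</span></li>
</ul>
<h1 id="title">Catalog</h1>
"""

names = SelectorExtractor("span", css_class="name")   # select by tag + class
names.feed(page)
print(names.results)  # ['Desk lamp', 'Office chair']

title = SelectorExtractor("h1", element_id="title")   # select by tag + id
title.feed(page)
print(title.results)  # ['Catalog']
```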

Web scraping is often described as a form of data mining. It is carried out by automated scripts known as "crawlers" or "spiders", which systematically navigate web pages and collect the required information according to predefined rules.

What is Web Scraping?

Web scraping is the process of collecting data from websites on the Internet. It uses programming languages and various libraries to produce a structured dataset, and it is commonly used in search engines, market research, and data analysis applications.

This technique allows the automatic extraction of data on a targeted web page according to certain criteria. The data extraction process is carried out programmatically using various methods, and the resulting data is processed for analysis.

Web Scraping facilitates big data analysis by saving time and resources.

The data collection process starts by analyzing the HTML structure of the web page; the relevant data points are then targeted with methods such as CSS selectors, XPath, or regular expressions (regex). Finally, the extracted data is converted into a standard format so that the necessary information is obtained systematically. This lets organizations reach data-driven strategic decisions quickly.
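For the regex route and the final "standard format" step, here is a small sketch under stated assumptions: the listing markup, the field names, and the USD-only price pattern are all hypothetical. Regular expressions are brittle on real HTML, so they suit only small, predictable snippets like this one; JSON is used here as one common standard output format.

```python
import json
import re

# Hypothetical, already-downloaded HTML with a very regular structure.
html = """
<div class="listing"><h2>Used bicycle</h2><span class="price">USD 120.50</span></div>
<div class="listing"><h2>Tent</h2><span class="price">USD 89.00</span></div>
"""

# Narrow pattern capturing each listing's title and numeric price.
LISTING = re.compile(
    r'<h2>(?P<title>[^<]+)</h2>\s*<span class="price">USD (?P<price>\d+(?:\.\d+)?)</span>'
)

# Normalize every match into a uniform record.
records = [
    {"title": m.group("title").strip(), "price": float(m.group("price")), "currency": "USD"}
    for m in LISTING.finditer(html)
]

# Serialize the records into a standard, machine-readable format.
print(json.dumps(records, indent=2))
```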

Working Principles of Scrapers

First, let's look at what a scraper is.

Scrapers are programs designed to collect data from websites. Like a web browser, a scraper fetches the requested pages from the specified URLs and analyzes their HTML code. Based on this analysis, it extracts the data structures defined by the user and makes them usable. For example, the content of specific HTML tags or the data inside tables can be obtained this way.
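The fetch-then-analyze cycle, including the table case mentioned above, can be sketched with Python's standard library. This is a minimal illustration, not a production scraper: fetch() makes a real network request, the User-Agent string is a made-up placeholder, and TableParser simply turns every table row into a list of cell texts. The usage at the end parses an inline sample so it runs offline.

```python
from html.parser import HTMLParser
from urllib.request import Request, urlopen


def fetch(url, timeout=10.0):
    """Download a page like a browser would and return its decoded HTML."""
    request = Request(url, headers={"User-Agent": "example-scraper/0.1"})  # placeholder UA
    with urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


class TableParser(HTMLParser):
    """Turn every <tr> into a list of the texts of its <td>/<th> cells."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None    # cells of the row being read
        self._cell = None   # text fragments of the cell being read

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)


def extract_table(html):
    parser = TableParser()
    parser.feed(html)
    parser.close()
    return parser.rows


# Offline usage with an inline sample; online you would call
# extract_table(fetch("https://example.com/some-page")) instead (hypothetical URL).
sample = "<table><tr><th>City</th><th>Temp</th></tr><tr><td>Ankara</td><td>21</td></tr></table>"
print(extract_table(sample))  # [['City', 'Temp'], ['Ankara', '21']]
```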

The data extraction process is dynamic and flexible.

When a scraper analyzes the structure of a website, it must follow a set of rules, not only when extracting data but also when processing it. For example, it should access the site respectfully and limit how often it sends requests so that it does not degrade the website's performance.
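One common way to control access frequency is to enforce a minimum interval between consecutive requests. The sketch below assumes a single-threaded scraper and a hypothetical PoliteThrottle class; the clock and sleep functions are injectable so the demonstration can use a simulated clock instead of really waiting. In a real scraper you would call wait() right before each request.

```python
import time


class PoliteThrottle:
    """Enforce a minimum interval between consecutive requests (hypothetical helper)."""

    def __init__(self, min_interval_seconds, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval_seconds
        self._clock = clock
        self._sleep = sleep
        self._last_request = None

    def wait(self):
        """Sleep until the next request is allowed, then record the request time."""
        now = self._clock()
        if self._last_request is not None:
            remaining = self.min_interval - (now - self._last_request)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last_request = now


# Demonstration with a simulated clock, so no real waiting happens.
simulated = {"now": 0.0, "slept": []}


def fake_clock():
    return simulated["now"]


def fake_sleep(seconds):
    simulated["slept"].append(seconds)
    simulated["now"] += seconds


throttle = PoliteThrottle(2.0, clock=fake_clock, sleep=fake_sleep)
throttle.wait()            # first request: no delay needed
simulated["now"] += 0.5    # pretend the request itself took 0.5 s
throttle.wait()            # must sleep the remaining 1.5 s
print(simulated["slept"])  # [1.5]
```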

Advanced algorithms and AI technologies are being used.

To make scrapers more effective, advanced algorithms and artificial intelligence techniques are sometimes used. With them, data can be collected accurately even from sites with dynamic content or frequently changing structures. As of 2024, these developments are expected to further improve the efficiency and accuracy of scraper programs.

Attention should be paid to data security and ethical rules.

Finally, web scraping must comply with both data security and ethical standards. Two key principles are obeying the law, especially personal data protection legislation, and respecting websites' terms of use. Ignoring these principles can expose scraper users to legal liability and loss of reputation.

To learn more about this, you can read our article ‘Python Education: The Best Beginner’s Guide to Specialize’.

Web Scraping Tools and Technologies

Web scraping relies on purpose-built tools and technologies to collect data. These tools analyze the HTML and CSS structure of different websites and extract the necessary information. Popular Python libraries such as Beautiful Soup and Scrapy are among the most widely used; both are open source and have large user and developer communities.

Automation tools such as Selenium, which can handle dynamic content generated with web technologies, especially JavaScript, also play an active role in data collection. Choosing technology that suits the task is critical to both the speed and the quality of data collection. These tools can obtain detailed, structured data even from complex web pages, but they may require advanced programming skills.

The need for careful data collection and compliance with ethical standards has made the methodology behind web scraping as important as the tools themselves. Experts who collect data from around the world keep refining these technologies and methods so that web scraping remains sustainable and safe.

For more details about web scraping technologies, you can read our article ‘The Best Cyber Security Tools: Practical and Reliable Solutions’.

Popular Web Scraping Tools

Octoparse is a popular data scraping tool; thanks to its user-friendly interface, scraping tasks can be set up in a visual environment without any coding knowledge.

Import.io, used especially in large-scale data collection projects, provides a comprehensive platform that lets users pull data from different sources, analyze it, and publish it as an API. Its multi-language support, including Turkish, makes it suitable for both local and global projects. Import.io also improves the scraping process with additional services such as data verification and cleaning.

ParseHub is another tool with advanced features for extracting data from dynamic, AJAX-based sites. Its easy-to-use interface and powerful commands let users capture data quickly and effectively. It also uses artificial intelligence to recognize the data on web pages and act accordingly.

Kimono Labs, on the other hand, was aimed especially at users doing API-oriented web scraping. Its ability to turn web pages into APIs made it easy to collect and integrate data in real time, and its intelligent adaptation and scheduling features increased automation and efficiency. Note that the hosted Kimono service was discontinued in 2016 after the company was acquired by Palantir.

Programming Languages and Libraries

Web scraping is mainly done with languages such as Python, JavaScript (Node.js), and Ruby. These languages offer comprehensive libraries and frameworks for web scraping.

Python is one of the most widely used languages for web scraping. Powerful libraries such as Beautiful Soup and Scrapy make processing and parsing HTML and XML documents straightforward, and they provide functions that simplify and speed up data collection.

In JavaScript, the Node.js environment is a popular choice for web scraping with modern libraries such as Cheerio and Puppeteer. Cheerio parses markup and lets you traverse and manipulate the resulting DOM quickly through a synchronous, jQuery-like API. Puppeteer, on the other hand, controls a headless browser, so it is preferred for pages with dynamic content and for browser-based automation.

For Ruby, the Nokogiri library makes working with XML and HTML comfortable, while the Mechanize library stands out with features such as session management and form submission. Both libraries take advantage of Ruby's object-oriented, flexible design, so they integrate well into data scraping workflows.

Each language and library has unique features that serve different needs in web scraping projects. When choosing tools, it is important to take this diversity into account and select the ones that best meet the project's requirements.

Web Scraping Process

Web scraping is the process of collecting data from a specific website, usually by programmatic methods that allow the systematic extraction of data from the target site. The first step is to understand the structure of the target site and decide which data will be collected.

Next, the requests needed to retrieve data from the website are configured, and the returned data is parsed. Parsing is usually done by analyzing the DOM (Document Object Model) structure and locating elements by their tags, classes, and IDs. Suitable web scraping tools and libraries make this process more efficient.
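The request-configuration step can be sketched with Python's standard urllib module. This is an illustration under assumptions: the search URL, query parameters, and User-Agent value are hypothetical, and the function only builds the request without sending it. Note that urllib normalizes header names, so "User-Agent" is stored as "User-agent".

```python
from urllib.parse import urlencode
from urllib.request import Request


def build_request(base_url, params=None, user_agent="example-scraper/0.1"):
    """Configure (but do not send) an HTTP GET request for a page to scrape."""
    url = f"{base_url}?{urlencode(params)}" if params else base_url
    headers = {
        "User-Agent": user_agent,                     # identify the scraper honestly
        "Accept": "text/html,application/xhtml+xml",  # we expect HTML back
        "Accept-Language": "en",
    }
    return Request(url, headers=headers, method="GET")


req = build_request("https://example.com/search", {"q": "desk lamp", "page": 2})
print(req.full_url)                   # https://example.com/search?q=desk+lamp&page=2
print(req.get_header("User-agent"))   # example-scraper/0.1

# Sending it would look like this (not executed here):
# from urllib.request import urlopen
# with urlopen(req, timeout=10) as response:
#     html = response.read().decode("utf-8", errors="replace")
```

Keeping request construction separate from sending makes it easy to review exactly what the scraper will ask the server for before any traffic is generated.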

Finally, the collected data is stored and analyzed. The raw data is saved to a database or file in a usable format and then evaluated with various analysis techniques. This stage is critical for making sense of the collected data and feeding it into decision-making processes.
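As one possible storage step, the sketch below saves scraped rows into SQLite using Python's built-in sqlite3 module and runs a first simple query over them. The table schema and sample rows are hypothetical; an in-memory database is used so the example leaves no files behind.

```python
import sqlite3

# Rows as a scraper might produce them (hypothetical sample data).
scraped = [
    ("Desk lamp", 24.90, "https://example.com/p/1"),
    ("Office chair", 149.00, "https://example.com/p/2"),
]

conn = sqlite3.connect(":memory:")  # pass a file path such as "products.db" to persist
conn.execute(
    """CREATE TABLE IF NOT EXISTS products (
           name  TEXT NOT NULL,
           price REAL,
           url   TEXT PRIMARY KEY
       )"""
)
# INSERT OR REPLACE keeps a single row per URL if the same page is scraped again.
conn.executemany(
    "INSERT OR REPLACE INTO products (name, price, url) VALUES (?, ?, ?)", scraped
)
conn.commit()

# A first, simple analysis step on the stored data.
count, avg_price = conn.execute("SELECT COUNT(*), AVG(price) FROM products").fetchone()
print(count, avg_price)
```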

Determination of the Data Collection Strategy

Determining a strategy is a critical step in the data collection process.

The strategy should match the purpose and requirements of the project. Before collecting data with web scraping, comprehensive planning is needed: which data will be collected, how it will be processed, and how it will be analyzed afterwards. This planning should be done carefully because it directly affects the success of the project.

The strategy takes concrete shape once the right data has been selected.

The planning stage must also respect legal boundaries. Especially in the context of cyber security training, it should be remembered that web scraping activities must first and foremost comply with the relevant laws, the sites' terms of service, and ethical rules. This keeps the project within legal limits and preserves its reliability.

Another important consideration when determining the data collection strategy is whether resources are adequate. Analyzing in advance the time, cost, and human resources needed to reach the goals helps the project finish efficiently and effectively. In this sense, strategic planning is essential for long-term success.

Analyzing the Page Structure

Analyzing the page structure is one of the most critical steps in the web scraping process. It starts by examining the basic code structures of the relevant web page, such as HTML and CSS. This review phase forms the basis for the accuracy of the data collection process.

In addition, the DOM (Document Object Model) structure should be understood in detail. The DOM lets programs access and manipulate a web page.
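To show what "accessed and manipulated programmatically" means in practice, here is a sketch using xml.dom.minidom, Python's built-in DOM implementation. It assumes well-formed XHTML; real-world HTML usually needs a more tolerant HTML parser. The news markup and the data-seen attribute are hypothetical.

```python
from xml.dom import minidom

# A small, well-formed XHTML fragment (hypothetical).
xhtml = """<html><body>
  <div id="news">
    <a href="/story/1">First headline</a>
    <a href="/story/2">Second headline</a>
  </div>
</body></html>"""

document = minidom.parseString(xhtml)

# Access: walk the tree with standard DOM methods.
container = [d for d in document.getElementsByTagName("div") if d.getAttribute("id") == "news"][0]
links = container.getElementsByTagName("a")
headlines = [(a.firstChild.data, a.getAttribute("href")) for a in links]
print(headlines)  # [('First headline', '/story/1'), ('Second headline', '/story/2')]

# Manipulation: the same API can also modify the tree.
links[0].setAttribute("data-seen", "true")
print(links[0].toxml())
```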

During the scraping process, you should decide which parts of the page will be collected as data, how the elements will be selected, and in what format the data will be stored. All tags, classes, and IDs on the page are examined thoroughly to draw up a roadmap of how each piece of data will be captured. This is critical for maximizing the accuracy and consistency of the data.

Compatibility with the tools and libraries used to make data collection more effective should also be considered. In particular, accessing specific HTML elements with XPath or CSS selectors is important for automating the work. The analyzed page structure affects both the accuracy of the selected data and the efficiency of collection, so a detailed review is required. The scraping mechanism should also work reliably across different browsers and screen resolutions, so responsive design elements should not be ignored.
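Here is a minimal XPath-style sketch using Python's built-in xml.etree.ElementTree. Two assumptions apply: the product markup is hypothetical and well-formed, and ElementTree supports only a limited subset of XPath (full XPath 1.0 needs a library such as lxml, and CSS selectors need something like Beautiful Soup's select() method).

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed product markup.
markup = """<div>
  <div class="product" id="p1"><span class="name">Desk lamp</span><span class="price">24.90</span></div>
  <div class="product" id="p2"><span class="name">Office chair</span><span class="price">149.00</span></div>
</div>"""

root = ET.fromstring(markup)

products = []
# XPath-style path: every descendant <div> whose class attribute is exactly "product".
for node in root.findall(".//div[@class='product']"):
    products.append({
        "id": node.get("id"),
        "name": node.find("span[@class='name']").text,
        "price": float(node.find("span[@class='price']").text),
    })

print(products)
```

Because the attribute predicate matches the class value exactly, an element with several classes (for example class="product featured") would not match; that is one reason dedicated selector libraries are preferred on real pages.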

Legal and Ethical Dimension of Web Scraping

You can find more information about the legal limits of web scraping in our article ‘What is Cyber Security Expertise?’.

Although web scraping is a technically simple and effective way to collect data, it must be carried out with due regard for legal and ethical rules. Much of the data on the internet is protected by copyright or governed by terms of use. Website owners usually use the robots.txt file to state which parts of their site automated crawlers may access. Therefore, when scraping, you should respect the target site's terms of use and the data protection laws of the relevant country. Beyond the care required by personal data regulations such as KVKK (Turkey's Personal Data Protection Law) and the GDPR, ethical standards should also be upheld to prevent the collected data from being used for malicious purposes.

Legal Limitations and Compliance

When performing web scraping operations, various legal restrictions must be observed. First of all, the terms of use of the targeted website should be reviewed in detail and these terms should be complied with.

Following the directives in the site's robots.txt file is one of the basic conditions for staying on a sound legal footing.
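Python's standard library includes urllib.robotparser for checking robots.txt rules before fetching a page. In the sketch below, the robots.txt content and the agent name are hypothetical and are parsed from a string; against a live site you would call set_url() with the site's robots.txt address and then read().

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content.
robots_txt = """
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

rules = RobotFileParser()
rules.parse(robots_txt.splitlines())

agent = "example-scraper"
for url in ("https://example.com/products/1", "https://example.com/private/report"):
    print(url, "allowed" if rules.can_fetch(agent, url) else "disallowed")

# The requested pause between requests, if the site declares one.
print("crawl delay:", rules.crawl_delay(agent))
```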

Copyright deserves particular attention: extracting content without authorization may constitute intellectual property infringement. To prevent legal disputes, the planned actions should be analyzed in detail to confirm that they fit within the legal framework.

In addition, national and international regulations on the processing of personal data, such as KVKK and the GDPR, must be carefully observed during data collection. Complying with these regulations is mandatory to protect personal data. Data collectors are also responsible for protecting and securely storing the collected data. If violations are detected, the resulting sanctions may include criminal liability.

Ethical Web Scraping Applications

Data collection in web scraping should follow ethical rules, and frequent, intensive requests that place excessive load on a site should be avoided. Striking this balance is critical for keeping web resources available.

Given the risks involved in accessing the private information of businesses and individuals, ethical principles should guide such access, and this information should be protected in all cases.

Transparency should also guide the processing and use of the collected data: the purpose and method of data collection should be clearly explained to interested parties. In addition, all legal regulations must be followed when sharing and distributing the collected data.

Web scraping should not violate individuals' privacy rights or harm the user experience. Planning and carrying out scraping activities according to sustainable, ethical standards helps protect trust in the industry and the open structure of the Internet. Such activities should also respect open source ethics and legal responsibilities.

Frequently Asked Questions About Web Scraping

What is web scraping used for?

Web scraping is a process that automatically collects and analyzes data on the Internet. Software tools "scrape" specific web pages or websites and extract valuable information for a particular purpose. This makes web scraping an important tool for anyone who wants to gain a competitive advantage in their sector or take security measures.

What is data scraping, and how is it done?

Data scraping is the process of automatically collecting and analyzing large amounts of data. It is usually applied to unstructured data in order to explore it, find patterns, and obtain valuable information. Data scraping methods access various data sources (websites, social media platforms, databases, etc.) and extract data from them.

What does the scrape process mean?

The scraping process is a method for automatically collecting and pulling data from web pages. It analyzes the HTML or XML code of a website and extracts the desired data according to a specific structure.

What does "scraper" mean in software?

A scraper is a software tool that automatically extracts data from websites. Also called a web scraper, it scans the content of a given website and extracts the desired data. A scraper is programmable and targets specific elements by analyzing the HTML or XML code of web pages. For example, a scraper can collect product prices from an e-commerce site or headlines from a news site, sparing users the trouble of researching and collecting this data manually.
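Scraped prices usually arrive as display text, so a price-collecting scraper needs a normalization step. The sketch below is one hypothetical way to do it, assuming dollar-formatted strings with comma thousands separators; Decimal is used instead of float to avoid binary rounding errors with money.

```python
import re
from decimal import Decimal

# Hypothetical price strings as they might appear in scraped product listings.
raw_prices = ["$1,299.99", " $24.90 ", "Price: $5", "Out of stock"]

# A dollar sign followed by digits (with optional thousands commas) and optional cents.
PRICE = re.compile(r"\$\s*([\d,]+(?:\.\d{1,2})?)")


def parse_price(text):
    """Return the first dollar amount in text as a Decimal, or None if there is none."""
    match = PRICE.search(text)
    if match is None:
        return None
    return Decimal(match.group(1).replace(",", ""))


prices = [parse_price(p) for p in raw_prices]
print(prices)  # [Decimal('1299.99'), Decimal('24.90'), Decimal('5'), None]
```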