Live forensic analysis of the Linux file system, often performed in the early stages of incident response, is critical for detecting threat indicators and assessing potential security breaches. It involves examining digital artifacts, system logs, user accounts, and file structures to find evidence of unauthorized access, malicious activity, or data breaches.

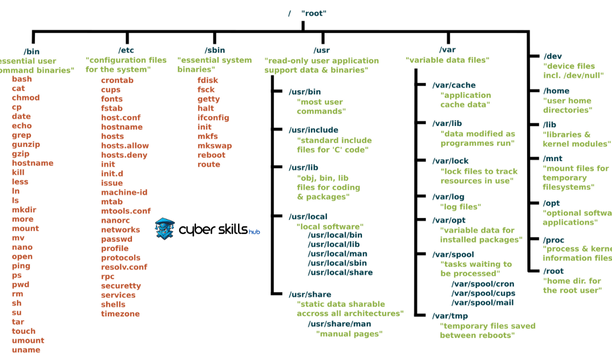

Introduction to the Linux File System

The Linux file system, with its flexible structure and built-in security mechanisms, is an important foundation for investigating complex security incidents. File structure, access permissions, and timestamps all leave traces when a system is breached, so a thorough understanding of these elements forms the backbone of forensic investigation methodology.

Careful analysis by experienced investigators is critical for identifying atypical behavior in Linux environments. It reveals changes made to the system, file accesses, permission settings, and the footprints left behind during an attack, which makes it indispensable.

Basic Commands and Tools

The basic commands used in Linux file system analysis are ls, chmod, chown, find, grep, and ps. Together they cover listing files and directories and viewing their permissions (ls -l), changing permissions and ownership (chmod, chown), searching for files (find), filtering content (grep), and listing processes (ps). For more information on the detailed usage of these commands and other important Linux commands, please refer to our article titled “Linux Commands: A Guide to Mastering Linux from Beginner to Advanced.”

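In Linux, every file and directory has an inode number (shown by ls -i). The inode it refers to stores the object’s metadata, including its owner, permissions, size, and timestamps, which makes it a valuable source of information about the file system.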
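To see the inode number and the metadata stored with it, something like the following minimal Python sketch can be used. It relies only on the standard library’s os.lstat and stat.filemode; describe_inode is a hypothetical helper name, not part of any forensic toolkit, and lstat is used so that a symbolic link is examined itself rather than followed.

```python
import os
import stat
from datetime import datetime, timezone


def _utc(ts: float) -> str:
    """Format a POSIX timestamp as an ISO 8601 UTC string."""
    return datetime.fromtimestamp(ts, tz=timezone.utc).isoformat()


def describe_inode(path: str) -> dict:
    """Return the inode number and key metadata of path (hypothetical helper)."""
    st = os.lstat(path)  # do not follow symlinks; inspect the link itself
    return {
        "inode": st.st_ino,
        "mode": stat.filemode(st.st_mode),  # same notation as ls -l, e.g. '-rw-r--r--'
        "links": st.st_nlink,               # number of hard links to this inode
        "uid": st.st_uid,
        "gid": st.st_gid,
        "size": st.st_size,
        "atime": _utc(st.st_atime),  # last access (may be coarse under relatime)
        "mtime": _utc(st.st_mtime),  # last content modification
        "ctime": _utc(st.st_ctime),  # last metadata (inode) change
    }
```

Calling describe_inode("/etc/passwd") returns roughly what stat /etc/passwd prints. Two paths with the same inode number on the same file system are hard links to the same file.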

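The last and who commands are important tools for examining user activity and session information. The last command lists past logins and reboots recorded in /var/log/wtmp, while who shows the users currently logged in. Together they can reveal when and from where an attacker accessed the system; the commands the attacker actually ran must be sought elsewhere, for example in shell history files such as ~/.bash_history.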

Understanding the File Structure

In Linux file system analysis, it is vital to understand how the system is organized and where files are located, as well as which users or groups can access each file and directory.

- /etc: Contains system-wide configuration files.

- /var/log: This is where system logs are stored, and it is critical for tracking events.

- /home: Contains users’ personal files and directories (the root user’s home directory is /root).

- /usr: This is where application and system programs are usually located.

- /tmp: Stores temporary files. Because any user can write to it, attackers often use it to drop exploits and other tools during an attack.
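Because /tmp is a common staging area, a quick first check is to list files there that changed recently, similar to find /tmp -type f -mtime -1. The following minimal Python sketch shows the idea with the standard library only; recently_modified is a hypothetical helper name, and the 24-hour default window is an arbitrary assumption to tune per investigation.

```python
import os
import stat
import time
from typing import Iterator, Tuple


def recently_modified(root: str, hours: float = 24.0) -> Iterator[Tuple[str, float]]:
    """Yield (path, mtime) for regular files under root modified in the last `hours`."""
    cutoff = time.time() - hours * 3600
    # os.walk ignores unreadable directories by default and does not follow symlinks
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                st = os.lstat(path)
            except OSError:
                continue  # file disappeared or cannot be examined
            if stat.S_ISREG(st.st_mode) and st.st_mtime >= cutoff:
                yield path, st.st_mtime
```

Sorting the results, for example sorted(recently_modified("/tmp"), key=lambda item: item[1]), gives a rough timeline of what was written to the directory. Since mtime can be forged with touch, the output is a lead rather than proof.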

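Carefully examining permission structures and timestamps can provide strong evidence of suspicious changes to a file, although timestamps can be altered (for example, with the touch command) and should be corroborated with other sources.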

The log files recorded by the operating system’s daemons and services provide essential data during attack detection and analysis. The timestamps stored in these files are used to create a timeline of events.

Traceable Structure and Monitoring

Every file and directory in Linux is subject to permissions that determine who can read, write, or execute it. These permissions are defined separately for the owner, the group, and all other users; they can be viewed with ls -l and changed with chmod and chown.
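The owner, group, and other permission sets map to individual bits in a file’s mode, so risky settings are easy to search for. As one illustration, the minimal Python sketch below flags world-writable files and directories (the “other” write bit, which find’s -perm -0002 test also matches). The helper name world_writable is hypothetical, and skipping sticky directories such as /tmp is a judgment call made here, not a rule.

```python
import os
import stat
from typing import List


def world_writable(root: str) -> List[str]:
    """Return paths under root that any user may write to (o+w)."""
    hits = []
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            path = os.path.join(dirpath, name)
            try:
                mode = os.lstat(path).st_mode
            except OSError:
                continue
            if stat.S_ISLNK(mode):
                continue  # symlink permission bits are not used on Linux
            if not mode & stat.S_IWOTH:
                continue
            # Sticky, world-writable directories such as /tmp are normal by design
            if stat.S_ISDIR(mode) and mode & stat.S_ISVTX:
                continue
            hits.append(path)
    return hits
```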

Monitoring services such as auditd keep detailed records of system access and permission changes, allowing abnormal activity to be detected quickly. For more information on monitoring and managing activity on the system and network, please refer to our article entitled “What is System and Network Expertise and How is it Achieved?”

File and Directory Permissions

File and directory permissions in the Linux file system form the basis of access control and are of great importance for security. These permissions are defined separately for the file owner, group members, and other users, and are expressed as read (r), write (w), and execute (x) rights.

Keeping permissions minimal and consistent strengthens access control. The ls -l command displays file permissions and plays a vital role in analysis. For more information on password cracking and decryption techniques, which are relevant to system security, please visit our article titled “Decryption: How to Crack Passwords Using the Best Methods.”

Being able to track changes to file permissions is critical for post-attack analysis. Unexpected changes made with root privileges, in particular, can indicate malicious activity, and permission changes on sensitive or frequently modified files may indicate that attackers have tampered with them.

The history of file permissions also matters for detecting advanced attacks, because changes that attackers make to cover their tracks can be revealed through these records. A sudden change in a file’s metadata can indicate unauthorized access or the presence of malicious software. The stat command shows a file’s permissions, owner, and access, modification, and change times in detail; the change time (ctime) is updated whenever permissions or ownership change. The auditd service can also be configured to log critical permission changes on the system.
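Detecting sudden metadata changes comes down to comparing the fields stat reports at two points in time. The minimal Python sketch below records permissions, ownership, size, and timestamps, then reports which fields differ later. It only illustrates the idea: snapshot and diff_snapshots are hypothetical names, and a real baseline must be stored where an attacker cannot modify it.

```python
import os
import stat
from typing import Dict, Iterable, List, Tuple

FIELDS = ("mode", "uid", "gid", "size", "mtime_ns", "ctime_ns")
Snapshot = Dict[str, tuple]


def snapshot(paths: Iterable[str]) -> Snapshot:
    """Record selected stat fields for each existing path."""
    snap = {}
    for path in paths:
        try:
            st = os.lstat(path)
        except FileNotFoundError:
            continue  # skip absent paths; vanished files show up as "missing" in the diff
        snap[path] = (stat.filemode(st.st_mode), st.st_uid, st.st_gid,
                      st.st_size, st.st_mtime_ns, st.st_ctime_ns)
    return snap


def diff_snapshots(before: Snapshot, after: Snapshot) -> Dict[str, List[Tuple[str, object, object]]]:
    """Return {path: [(field, old, new), ...]} for every path whose metadata changed."""
    changes = {}
    for path, old in before.items():
        new = after.get(path)
        if new is None:
            changes[path] = [("missing", old, None)]
            continue
        delta = [(f, o, n) for f, o, n in zip(FIELDS, old, new) if o != n]
        if delta:
            changes[path] = delta
    return changes
```

A changed ctime_ns with an unchanged mtime_ns typically points to a chmod or chown rather than an edit of the file’s contents.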

SUID and SGID Bits

In Linux, the SUID (Set User ID) and SGID (Set Group ID) bits are among the cornerstones of file access control. They determine which user or group identity a program runs under, which makes them important for security.

- SUID (Set User ID): When the SUID bit is set on an executable file, the process runs with the privileges of the file’s owner rather than those of the user who launched it.

- SGID (Set Group ID): When the SGID bit is set on an executable file, the process runs with the privileges of the file’s group. When it is set on a directory, new files created inside it inherit the directory’s group.

- File and Directory Security: SUID and SGID bits should be used with care, because a misconfigured bit (for example, SUID on a root-owned program that can spawn a shell) can let an ordinary user escalate privileges.

SUID and SGID bits are added or removed with the chmod command, for example chmod u+s to set SUID or chmod g-s to clear SGID. System administrators should set them only when a program genuinely requires elevated privileges.

During a security review, every file on the system with the SUID or SGID bit set should be examined carefully, since an unexpected entry can point to unauthorized access or malicious software.
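The usual command-line approach is find / -xdev -type f \( -perm -4000 -o -perm -2000 \). The same check can be written as a minimal Python sketch using the standard stat constants S_ISUID and S_ISGID; find_suid_sgid is a hypothetical helper name, and comparing its output against a known-good list from a clean system of the same distribution is left to the investigator.

```python
import os
import stat
from typing import Iterator, List, Tuple


def _on_device(path: str, dev: int) -> bool:
    """True if path is on the file system identified by dev."""
    try:
        return os.lstat(path).st_dev == dev
    except OSError:
        return False


def find_suid_sgid(root: str) -> Iterator[Tuple[str, List[str]]]:
    """Yield (path, flags) for regular files with SUID and/or SGID set."""
    root_dev = os.lstat(root).st_dev
    for dirpath, dirnames, filenames in os.walk(root):
        # Like find -xdev: prune directories that live on another file system
        dirnames[:] = [d for d in dirnames
                       if _on_device(os.path.join(dirpath, d), root_dev)]
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                mode = os.lstat(path).st_mode
            except OSError:
                continue
            if not stat.S_ISREG(mode):
                continue
            flags = []
            if mode & stat.S_ISUID:
                flags.append("SUID")
            if mode & stat.S_ISGID:
                flags.append("SGID")
            if flags:
                yield path, flags
```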

Important Log Files

Log records are vital for monitoring events and system activities in Linux systems. The /var/log directory, in particular, plays a central role in security analysis and the diagnosis of malicious activities.

Log files such as auth.log, kern.log, and syslog (the names used on Debian and Ubuntu; Red Hat-based systems use /var/log/secure and /var/log/messages) provide critical information for attack detection and for investigating system behavior. They contain detailed data about authentication and login attempts and the status of system services.

Regular log analysis is the primary method for detecting suspicious events and establishing their chronological order. Tools such as logwatch, logcheck, and swatch are frequently used for this purpose.

Auth and Syslog Analysis

The syslog file records a wide range of activity, such as system service and kernel events. From a security perspective, it plays a key role in analyzing attacks or malfunctions targeting system services. Analyzing syslog helps establish when and how the system was misused and can help determine the direction of an investigation.

Finally, the integrity and reliability of timestamps matter during these analyses, because an attacker may have manipulated the system clock to obscure their traces. Keeping the system clock synchronized with time servers (NTP) and reviewing the logs of time-synchronization services such as chrony, which record clock adjustments, are effective ways to detect and analyze such tampering.
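One simple way to spot possible clock manipulation is to look for log entries whose timestamps run backwards. The minimal Python sketch below assumes each line begins with an RFC 3339 timestamp, as in rsyslog’s high-precision file format (for example 2024-03-03T14:02:11.123456+00:00); traditional syslog timestamps without a year would need different parsing. The function name and one-second tolerance are illustrative assumptions, and a backwards jump is only a hint, since merged or rotated logs can produce one too.

```python
from datetime import datetime, timedelta
from typing import Iterable, List, Tuple


def backward_jumps(lines: Iterable[str], tolerance_seconds: float = 1.0
                   ) -> List[Tuple[int, datetime, datetime]]:
    """Return (line_number, previous_ts, current_ts) wherever time goes backwards."""
    tolerance = timedelta(seconds=tolerance_seconds)
    jumps = []
    prev = None
    for number, line in enumerate(lines, start=1):
        token = line.split(" ", 1)[0]
        try:
            ts = datetime.fromisoformat(token)
        except ValueError:
            continue  # line does not start with a parseable timestamp
        if ts.tzinfo is None:
            continue  # naive timestamps cannot be compared with offset-aware ones
        if prev is not None and ts < prev - tolerance:
            jumps.append((number, prev, ts))
        prev = ts
    return jumps
```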

Log Indicators to Watch Out For

Events on Linux systems are recorded in log files in the /var/log directory. These files contain detailed records of system and application service activity and are of great interest to information security professionals.

For example, the auth.log file contains important data related to authentication processes. This file is a valuable resource for tracking user logins and logouts, sudo command usage, and other authentication attempts. In the event of a suspected security breach, auth.log is one of the first logs to examine to detect traces of unauthorized access.
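As an example of what to look for, OpenSSH records failed password attempts in auth.log with lines such as “Failed password for root from 203.0.113.7 port 52144 ssh2”. The minimal Python sketch below counts those attempts per source address and user name. The regular expression covers only this message form (other sshd messages are not matched), and failed_logins is a hypothetical helper.

```python
import re
from collections import Counter
from typing import Iterable

# Matches OpenSSH messages such as:
#   "Failed password for root from 203.0.113.7 port 52144 ssh2"
#   "Failed password for invalid user admin from 198.51.100.4 port 40000 ssh2"
FAILED_PASSWORD = re.compile(
    r"Failed password for (?:invalid user )?(?P<user>\S+) "
    r"from (?P<ip>\S+) port \d+"
)


def failed_logins(lines: Iterable[str]) -> Counter:
    """Count failed SSH password attempts per (source IP, user name)."""
    counts: Counter = Counter()
    for line in lines:
        match = FAILED_PASSWORD.search(line)
        if match:
            counts[(match.group("ip"), match.group("user"))] += 1
    return counts
```

Opening the log with open("/var/log/auth.log", errors="replace") and calling failed_logins(log).most_common(10) lists the noisiest sources. Many failures from one address followed by an “Accepted” line for the same address is a classic sign of a successful brute-force attack.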

Web server logs such as access.log and error.log (for example, under /var/log/apache2/ or /var/log/nginx/) record incoming requests and the errors the server encountered. Analyzing the details of HTTP requests is critical for detecting attempts to attack web applications, and the information in these files plays a vital role in investigating and preventing web-based attacks.
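Many Apache and Nginx installations write access.log in the “combined” format. Assuming that format, the minimal Python sketch below counts, per client IP address, requests that ended with an HTTP error status (400 or above), which often surfaces scanners probing for paths such as /wp-login.php or /.env. The regular expression and the helper name error_counts are illustrative; custom log formats need a different pattern.

```python
import re
from collections import Counter
from typing import Iterable

# "combined" log format, for example:
# 203.0.113.7 - - [10/Oct/2024:13:55:36 +0000] "GET /.env HTTP/1.1" 404 162 "-" "curl/8.0"
COMBINED = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+)[^"]*" (?P<status>\d{3}) \S+'
)


def error_counts(lines: Iterable[str], min_status: int = 400) -> Counter:
    """Count requests per client IP that received an HTTP status >= min_status."""
    counts: Counter = Counter()
    for line in lines:
        match = COMBINED.match(line)
        if match and int(match.group("status")) >= min_status:
            counts[match.group("ip")] += 1
    return counts
```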

Finally, kernel logs such as kern.log, together with the output of the dmesg command, contain messages related to the kernel and hardware. Critical problems such as system crashes or driver errors appear here. These messages also record kernel module loading, which makes them important for detecting malicious modules used by attackers.
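When an unsigned, out-of-tree, or non-GPL-compatible module is loaded, the kernel marks itself as “tainted” and logs a message, and the current taint flags can be read from /proc/sys/kernel/tainted (0 means untainted). The minimal Python sketch below filters kernel log lines for those messages and reads the taint value. The marker strings are assumptions based on common kernel wording, not an exhaustive list, and a tainted kernel is not proof of compromise, since proprietary drivers taint it too.

```python
from typing import Iterable, List

# Substrings found in kernel messages about module-related tainting, e.g.
# "loading out-of-tree module taints kernel." (illustrative, not exhaustive)
TAINT_MARKERS = ("taints kernel", "tainting kernel")


def taint_lines(lines: Iterable[str]) -> List[str]:
    """Return kernel log lines that mention the kernel being tainted."""
    return [line.rstrip("\n") for line in lines
            if any(marker in line for marker in TAINT_MARKERS)]


def kernel_taint(path: str = "/proc/sys/kernel/tainted") -> int:
    """Return the kernel taint bitmask (0 means the kernel is not tainted)."""
    with open(path) as fh:
        return int(fh.read().strip())
```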

Rootkit and Backdoor Detection

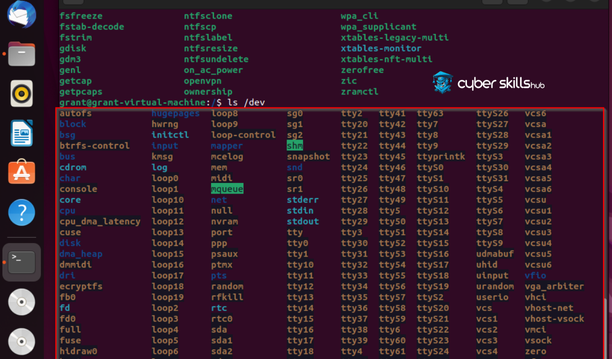

Rootkit and backdoor activity on Linux systems can often evade standard security mechanisms. For forensic techniques used in rootkit and backdoor detection and ways to specialize in this field, check out our content titled “Ways to Achieve Success in Forensic Computing Expertise Training.” Dedicated scanners such as chkrootkit and rkhunter can examine the system in depth for known rootkit signatures and other suspicious indicators. In addition, modified system files should be looked for in the root user’s home directory (/root) and in critical locations such as /bin, /sbin, /usr/bin, and /usr/sbin, and any hidden processes should be investigated thoroughly.

File integrity checkers such as AIDE and Tripwire play a key role in exposing attackers’ tracks by detecting changes to file contents and permissions. Any anomaly they report may require quick and effective intervention by the system administrator.
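AIDE and Tripwire work by recording a baseline of important files and later comparing the system against it. The core of that idea fits in a minimal Python sketch using SHA-256 from hashlib. It is a simplified illustration, not a replacement for those tools: it hashes file contents only, and build_baseline and compare_baselines are hypothetical names. In practice the baseline must live on read-only or off-host storage, or an attacker can simply regenerate it.

```python
import hashlib
import os
from typing import Dict, List


def hash_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks to bound memory use."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_baseline(root: str) -> Dict[str, str]:
    """Map every regular (non-symlink) file under root to its SHA-256 digest."""
    baseline = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if os.path.islink(path) or not os.path.isfile(path):
                continue
            try:
                baseline[path] = hash_file(path)
            except OSError:
                continue  # unreadable file; a real tool would record this as well
    return baseline


def compare_baselines(old: Dict[str, str], new: Dict[str, str]) -> Dict[str, List[str]]:
    """Classify paths as added, removed, or modified between two baselines."""
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "modified": sorted(p for p in old.keys() & new.keys() if old[p] != new[p]),
    }
```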

Advanced Scanning Tools

In Linux systems, advanced scanning tools have become an indispensable part of incident investigation. They need to reveal the system’s current state in detail so that investigators can track potential threats. Detecting hidden files, permission changes, and other anomalies is usually the first step.
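Files whose names begin with a dot are hidden from a plain ls, and attackers sometimes go further with names such as “...” or “. ” that are easy to mistake for the “.” and “..” entries. The minimal Python sketch below lists hidden entries under a directory and flags those deceptive names; hidden_entries is a hypothetical helper, and the “dots and spaces only” rule is a simple heuristic assumed here.

```python
import os
from typing import List, Tuple


def hidden_entries(root: str) -> List[Tuple[str, bool]]:
    """Return (path, deceptive) for every hidden file or directory under root."""
    results = []
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            if not name.startswith("."):
                continue
            # Names made only of dots and spaces ("...", ". ") imitate "." and ".."
            deceptive = set(name) <= {".", " "}
            results.append((os.path.join(dirpath, name), deceptive))
    return results
```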

These tools combine structural analysis with, in some cases, heuristic techniques, which allows them to uncover even well-hidden threats. This is particularly important for identifying processes that run under legitimately authenticated accounts but may still be malicious.

Using advanced scanning tools effectively requires specialized knowledge and experience. To learn more about the tools and techniques used in cybersecurity and to specialize in this area, we recommend reading our article “Cybersecurity Training: Become an Expert in the Field.” Expertise in Linux system security is essential for building a proactive defense against current threats and vulnerabilities as of 2024, and new developments in environment scanning and incident investigation tools will continue to improve the effectiveness of this work.

Analyzing Suspicious Processes and Connections

Suspicious processes running on Linux systems are one of the most obvious signs of a cyberattack. When analyzing processes, pay particular attention to each process’s start time, the user ID it runs under, and its network connections. The ps command (for example, ps aux) is the first tool to use for a quick review of running processes.
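Beyond ps, the /proc file system exposes each process directly. One well-known indicator is a process whose executable was deleted from disk after launch, which the kernel shows by appending “ (deleted)” to the /proc/PID/exe link target. The minimal Python sketch below checks for that; deleted_executables is a hypothetical helper, reading other users’ exe links generally requires root, and legitimate cases (such as a binary replaced by a package upgrade while still running) are reported too.

```python
import os
from typing import List, Tuple


def deleted_executables(proc_root: str = "/proc") -> List[Tuple[int, str]]:
    """Return (pid, exe_target) for processes whose executable no longer exists on disk."""
    hits = []
    for entry in os.listdir(proc_root):
        if not entry.isdigit():
            continue  # only numeric directory names are process IDs
        try:
            target = os.readlink(os.path.join(proc_root, entry, "exe"))
        except OSError:
            continue  # kernel thread, process already exited, or permission denied
        if target.endswith(" (deleted)"):
            hits.append((int(entry), target))
    return sorted(hits)
```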

Unfamiliar or untrusted programs may be hiding among legitimate processes. The top command, which displays running processes and their resource usage in real time, is an effective tool for spotting them.

The netstat and ss commands list the network connections opened by running processes. They show listening ports and the source and destination of established connections, which can reveal suspicious network activity. In addition, the lsof command shows which files a process currently has open.
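For IPv4 TCP, the kernel also exposes socket information in /proc/net/tcp, which is where netstat reads it; addresses and ports are hexadecimal and state 0A means LISTEN. The minimal Python sketch below parses that format to list listening sockets with their owning UID and socket inode (the inode can be matched against socket:[inode] links under /proc/PID/fd to find the owning process). It is only an illustration: parse_listening is a hypothetical helper, and IPv6 sockets in /proc/net/tcp6 are not handled.

```python
import socket
import struct
from typing import List, Tuple

TCP_LISTEN = "0A"  # state code the kernel uses for listening sockets


def parse_listening(text: str) -> List[Tuple[str, int, int, int]]:
    """Return (ip, port, uid, inode) for LISTEN entries in /proc/net/tcp-style text."""
    sockets = []
    for line in text.splitlines()[1:]:  # the first line is a column header
        fields = line.split()
        if len(fields) < 10 or fields[3] != TCP_LISTEN:
            continue
        ip_hex, port_hex = fields[1].split(":")
        # The address is printed as a 32-bit integer in host byte order, so packing
        # it back with native order ("=") recovers the original network-order bytes.
        ip = socket.inet_ntoa(struct.pack("=I", int(ip_hex, 16)))
        sockets.append((ip, int(port_hex, 16), int(fields[7]), int(fields[9])))
    return sockets
```

On a live system, reading open("/proc/net/tcp").read() and passing the text to parse_listening gives output comparable to the listening TCP entries in ss -tln.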

Advanced process analysis methods should be applied to track attackers and detect illegal activity. For example, the strace command traces a process’s system calls, and tools such as gdb or Volatility can be used to analyze process memory and memory dumps. Process forensics not only identifies suspicious activity but also provides insight into the attack methodology and yields critical intelligence about the adversary’s capabilities. These techniques support a comprehensive threat assessment and the development of a precise response plan.

Frequently Asked Questions About Linux File System Analysis

Why is Linux file system analysis important?

Linux file system analysis is critical for detecting unauthorized access, malicious activity, and data breaches. This analysis involves examining digital artifacts such as system logs, users, and file structures to identify and prevent security breaches.

What are the basic commands used in Linux file system analysis?

Basic commands frequently used in Linux file system analysis include ls, chmod, chown, find, grep, and ps. These commands perform functions such as listing files and folders, viewing and changing permissions, searching for files, filtering content, and monitoring processes.

How are log files used in Linux security analysis?

Log files are critical because they record system activity and security events. In particular, auth.log, syslog, and other log files in the /var/log directory provide valuable information about authentication attempts and the status of system services.

What are SUID and SGID bits, and why are they important?

SUID (Set User ID) and SGID (Set Group ID) are special permission bits in Linux. They make a program run with the privileges of the file’s owner (SUID) or group (SGID) instead of those of the user who launched it; on a directory, SGID makes new files inherit the directory’s group. If misconfigured, they can lead to privilege escalation vulnerabilities.

What tools are recommended for rootkit and backdoor detection?

For rootkit and backdoor detection on Linux systems, tools such as chkrootkit and rkhunter are recommended. These tools look for known rootkit signatures, hidden files, and modified system files to uncover suspicious behavior.

How are file permissions managed and analyzed in a Linux system?

File permissions in Linux are managed using the chmod and chown commands. The ls -l command displays file permissions. Careful management and monitoring of file permissions is extremely important for security.

How are advanced scanning tools used and what is their importance?

Advanced scanning tools analyze the system in depth to detect hidden files and permission changes. These tools use structural and heuristic techniques to identify threats on the system and help prevent security breaches.